AWS at Scale #Main 4: Introducing an AWS Cloud Platform (Provider) to Consumer Model.

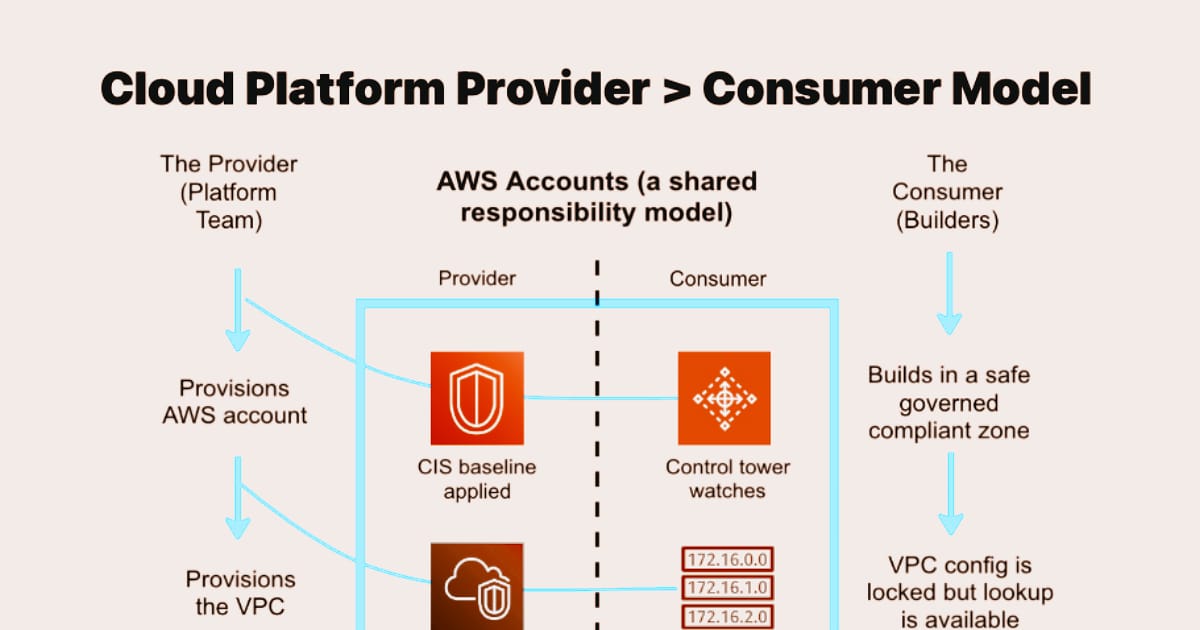

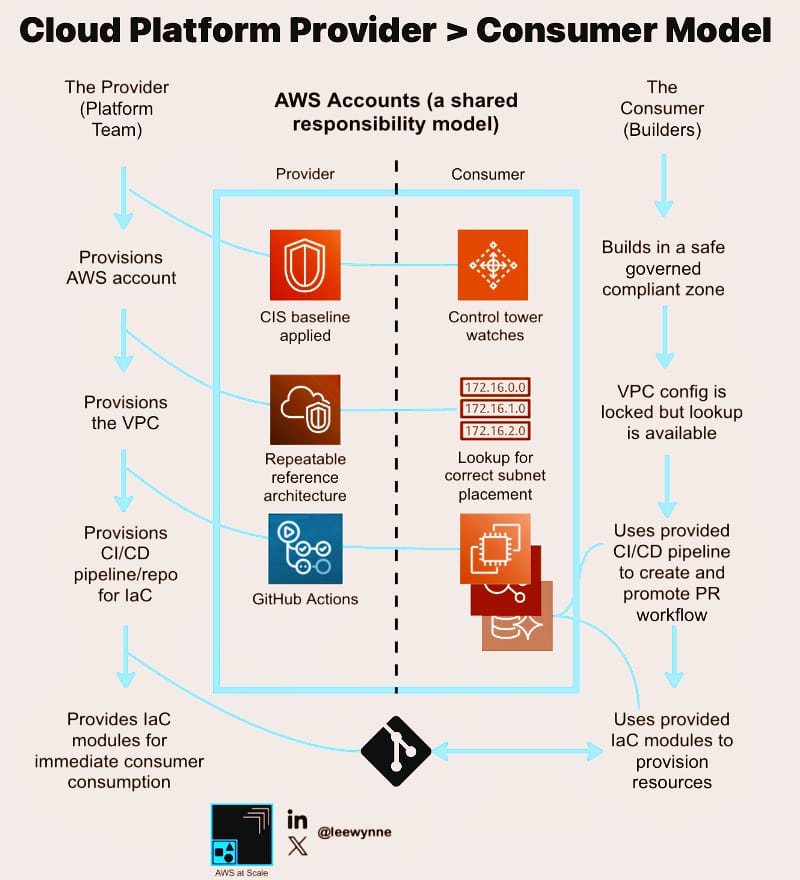

Before we get into the cloud platform in action, here's an example of a cloud platform provider-to-consumer model.

Introduction

I’d say this model suits any organisation that is doing AWS at a decent scale and wants to keep building in line with compliance, governance, principles, standards, policies and general guidelines, whilst also maintaining the velocity of change and innovation that the organisation demands.

Should you integrate a provider > consumer model like this in a start-up or SME? Probably not; you’re more than likely wearing many hats anyway. But (and it’s a big but) if you scale quickly, you’re going to want to move out of the DIY account into something better suited to the ongoing growth of your development and DevOps teams as you expand beyond one team.

If you’re doing AWS at some sort of scale, then somewhere deeply embedded in the culture of your organisation will be the need to get moving on a provider (cloud platform) to consumer (workload team) model. The alternative is that you end up in cloud hell: no clear lines of shared responsibility, anti-patterns emerging, diluted standards, poor governance and a general lack of compliance (with the financial risk that brings), to the point where you’re looking to reboot and start all over again.

So what’s a cloud platform to consumer model? Let’s take a look.

The AWS Platform ‘Provider to Consumer’ Model

Assume the blue box in the diagram is an AWS account, with ‘provider’ on the left and ‘consumer’ on the right. The text around the outside shows the segregation of responsibility between them:

Provider: Centralised cloud platform team

Consumer: Workload / builder team deploying resources

AWS at Scale - Cloud Platform Provider to Consumer Model

Note: This is an example of a workload-specific AWS account. If this were a more critical workload, it would be three AWS accounts (dev, stage and prod).

Reminder: don’t blend your AWS accounts across environments and workloads; it’s like crossing the streams in Ghostbusters!

FFS, don’t put everything in one AWS account 🙉

The account is also usually hosted in an AWS Landing Zone (likely with Control Tower enabled).

First up, split the responsibility into two groups: the provider (the cloud platform team) and the consumer (developers, engineers and DevOps).

So, what does the provider do?

AWS Account Provisioning

In this model, all AWS accounts are provisioned (on request) by the platform team. Consumers have no ability to provision accounts within your AWS Organisation, or to invite an account provisioned outside your organisation into it.

Why?

Key information needs to be captured at request time to populate a suite of mandatory tag values, which in turn feed an overall technology data model (including a CMDB, CSDN and an enterprise services catalogue).

Key information includes:

❓ Where’s the money coming from?

❓ What’s the cost code (for chargeback)?

❓ What is the tier of workload hosted within the account (business criticality)?

❓ Forecasted spend (for budget control and notifications)

❓ Account level technical and security contacts (for incident management)

❓ Environment (prod/non-prod)

❓ Business division

❓ Workload alias

❓ Workload unique ID

Note: Don’t collect mandatory tag values unless they have a source of truth.
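As a minimal sketch of that capture step, assuming hypothetical tag keys that mirror the questions above (names like `CostCode` and `WorkloadTier` are illustrative, not an AWS standard), an account request can be rejected before vending if a mandatory value is missing or doesn't match its source of truth:

```python
from dataclasses import dataclass, field

# Hypothetical tag keys, one per question above.
MANDATORY_TAGS = (
    "FundingSource",
    "CostCode",
    "WorkloadTier",
    "ForecastMonthlySpend",
    "TechnicalContact",
    "SecurityContact",
    "Environment",
    "BusinessDivision",
    "WorkloadAlias",
    "WorkloadId",
)

# Hypothetical allowed values. In practice each set would be read from its
# source of truth (finance system, CMDB, services catalogue) at request time.
ALLOWED_VALUES = {
    "Environment": {"prod", "non-prod"},
    "WorkloadTier": {"tier-1", "tier-2", "tier-3"},
}


@dataclass
class AccountRequest:
    account_name: str
    tags: dict[str, str] = field(default_factory=dict)


def validate_request(request: AccountRequest) -> list[str]:
    """Return every problem found; an empty list means the account can be vended."""
    problems = []
    for key in MANDATORY_TAGS:
        value = request.tags.get(key, "").strip()
        if not value:
            problems.append(f"missing mandatory tag: {key}")
        elif key in ALLOWED_VALUES and value not in ALLOWED_VALUES[key]:
            problems.append(f"invalid value for {key}: {value!r}")
    return problems


if __name__ == "__main__":
    request = AccountRequest("payments-dev", {"CostCode": "CC-1234", "Environment": "staging"})
    for problem in validate_request(request):
        print(problem)
```

Validating against a fixed set of allowed values is the code equivalent of the note above: if there's no source of truth to check a value against, it probably shouldn't be a mandatory tag.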

The account also needs to be security baselined:

🔐 CIS security baseline

🔐 Security Hub configuration

🔐 GuardDuty configuration

🔐 S3 block all public access fail-safe (likely from SCP)

🔐 Block local IAM users without approval (with forced key rotation)

🔐 AWS Config rules (likely from Control Tower)

🔐 Persistent account pattern applied (sandbox, dev/stage/prod, utility): no going into prod from a sandbox/dev account.

🔐 Detection of sensitive information in datastores (to drive data classification) and of secrets/credentials (to notify and alert).
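The S3 and IAM fail-safes in that list are usually expressed as service control policies (SCPs). As a minimal sketch, assuming a hypothetical platform role named `PlatformAutomation` that acts as the approval path and is the only principal allowed to change these settings, the policy document can be built and reviewed in code before it's attached to an organisational unit:

```python
import json

# Hypothetical role used by the platform team's automation (the approval path).
PLATFORM_ROLE_ARN = "arn:aws:iam::*:role/PlatformAutomation"

# Every deny below applies to all principals except the platform role.
EXEMPT_PLATFORM = {"ArnNotLike": {"aws:PrincipalArn": PLATFORM_ROLE_ARN}}

baseline_scp = {
    "Version": "2012-10-17",
    "Statement": [
        {
            # S3 block-all-public-access fail-safe: consumers can't switch it off.
            "Sid": "DenyS3PublicAccessBlockChanges",
            "Effect": "Deny",
            "Action": ["s3:PutAccountPublicAccessBlock"],
            "Resource": "*",
            "Condition": EXEMPT_PLATFORM,
        },
        {
            # No local IAM users or long-lived access keys outside the platform.
            "Sid": "DenyLocalIamUsers",
            "Effect": "Deny",
            "Action": ["iam:CreateUser", "iam:CreateAccessKey"],
            "Resource": "*",
            "Condition": EXEMPT_PLATFORM,
        },
        {
            # Consumers can't disable the baselined detective services.
            "Sid": "DenySecurityServiceTampering",
            "Effect": "Deny",
            "Action": ["guardduty:DeleteDetector", "securityhub:DisableSecurityHub"],
            "Resource": "*",
            "Condition": EXEMPT_PLATFORM,
        },
    ],
}

if __name__ == "__main__":
    print(json.dumps(baseline_scp, indent=2))
```

Forced key rotation isn't something an SCP can express; that part of the baseline is more naturally a Config rule.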

Consumers are usually focused on what they want to build, not the bigger picture / needs of the overall organisation (especially if you’re using contractors and suppliers). The integrity of the platform will be compromised if too much trust and control is handed over.

Implementing fail-safes, permission boundaries, centralised Config rules, governance and compliance ensures that your consumers are building in a safe zone, protecting them (and the organisation) from poor practices that stem from taking the path of least resistance, whether to meet tight deadlines or through a lack of enterprise cloud platform knowledge.

VPC Provisioning

VPCs are provisioned, on request, by the platform team at the time the account is provisioned (or later when requested).

Workload teams cannot provision a VPC.

Why?

☑️ Organisations need centralised visibility and control over their networks.

☑️ VPC design should be industrialised and standardised.

☑️ The consumer teams should have no ability to create a VPC or to reconfigure the VPC that’s provided to them.

☑️ VPCs should be based on a reference architecture and preconfigured out of the box to include east-west inspection, centralised egress inspection, subnet tiering, routing and automated IP checkout (if private address spacing is needed). Avoid NACLs (horrific to manage at scale) and use blackhole routing.

☑️ No VPC peering: put a fail-safe in place and advise consumers to use PrivateLink first. That way they maintain control over the configuration through their own code base.

☑️ Only use a transit gateway for cross-VPC traffic if absolutely necessary. It should be managed centrally, but that can also introduce a bottleneck, as the configuration sits outside the consumer’s code base. Ideally, your transit gateway should be used for core services only, with preconfigured routing and port management (still passing through east-west inspection).
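The "no VPC creation" and "no VPC peering" rules above can also be enforced as SCP fail-safes. A minimal sketch, assuming a hypothetical network automation role (`PlatformNetworkAutomation`) that vends VPCs from the reference architecture; note that peering is denied for everyone, with no exemption:

```python
import json
from typing import Optional

# Hypothetical role that vends VPCs from the reference architecture.
NETWORK_ROLE_ARN = "arn:aws:iam::*:role/PlatformNetworkAutomation"


def deny(sid: str, actions: list[str], exempt_arn: Optional[str] = None) -> dict:
    """Build an SCP deny statement, optionally exempting one principal ARN pattern."""
    statement = {"Sid": sid, "Effect": "Deny", "Action": actions, "Resource": "*"}
    if exempt_arn:
        statement["Condition"] = {"ArnNotLike": {"aws:PrincipalArn": exempt_arn}}
    return statement


network_scp = {
    "Version": "2012-10-17",
    "Statement": [
        # Only the platform's network automation may create or reshape VPCs.
        deny(
            "DenyVpcChangesOutsidePlatform",
            [
                "ec2:CreateVpc",
                "ec2:DeleteVpc",
                "ec2:ModifyVpcAttribute",
                "ec2:CreateInternetGateway",
                "ec2:AttachInternetGateway",
            ],
            exempt_arn=NETWORK_ROLE_ARN,
        ),
        # No VPC peering for anyone; PrivateLink is the preferred pattern.
        deny(
            "DenyVpcPeering",
            ["ec2:CreateVpcPeeringConnection", "ec2:AcceptVpcPeeringConnection"],
        ),
    ],
}

if __name__ == "__main__":
    print(json.dumps(network_scp, indent=2))
```

The action list is illustrative rather than exhaustive; a real policy would be tuned to whatever the reference architecture lets consumers touch.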

What can consumers do?

Consumers can manage CDNs/WAF and security groups, and can look up VPC configuration to provision resources within the VPC, but they can’t accidentally place virtual machines in public-facing subnets.

Consumers can also deploy AWS PrivateLink to connect to AWS services across VPCs and accounts. This works better because they have control, and the configuration is embedded in their own code pipeline rather than held by a centralised network team.

It’s worth putting the effort in here: mass sprawl of poorly configured, insecure VPCs (including VPC peering) hosting many production workloads isn’t a situation you want to find yourself in.
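On the consumer side, looking up VPC configuration typically means selecting subnets by a tag the platform applies. As a sketch, assuming a hypothetical `Tier` tag on every vended subnet, the helper below works on records shaped like the `Subnets` list in an EC2 `DescribeSubnets` response (for example from boto3's `describe_subnets`), so it can be tested without an AWS connection. It's a convenience for consumers, not the control itself; the guarantee that nothing lands in a public subnet still has to come from platform-side policy.

```python
from typing import Optional

# Hypothetical tag the platform applies to every vended subnet.
TIER_TAG = "Tier"


def tag_value(resource: dict, key: str) -> Optional[str]:
    """Return the value of a tag from an EC2-style Tags list, or None."""
    for tag in resource.get("Tags", []):
        if tag["Key"] == key:
            return tag["Value"]
    return None


def private_subnet_ids(subnets: list[dict], tier: str = "private") -> list[str]:
    """Pick subnets of the requested tier, skipping any that auto-assign public IPs."""
    return [
        s["SubnetId"]
        for s in subnets
        if tag_value(s, TIER_TAG) == tier and not s.get("MapPublicIpOnLaunch", False)
    ]


if __name__ == "__main__":
    # Shaped like the "Subnets" list in a DescribeSubnets response.
    subnets = [
        {"SubnetId": "subnet-aaa", "MapPublicIpOnLaunch": True,
         "Tags": [{"Key": "Tier", "Value": "public"}]},
        {"SubnetId": "subnet-bbb", "MapPublicIpOnLaunch": False,
         "Tags": [{"Key": "Tier", "Value": "private"}]},
        {"SubnetId": "subnet-ccc", "MapPublicIpOnLaunch": False,
         "Tags": [{"Key": "Tier", "Value": "private"}]},
    ]
    print(private_subnet_ids(subnets))  # ['subnet-bbb', 'subnet-ccc']
```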

Consumer Facing CI/CD and IaC Repos

Consumer CI/CD and supporting repos are provisioned by the platform team at the time the account is provisioned.

Why?

If you’re already doing AWS at scale in a platform role, consider how many different technology stacks your consumer teams have in place to support resource provisioning across the enterprise. Does it look like this?

‼️ Jenkins

‼️ GitHub

‼️ BitBucket

‼️ GitLab

‼️ Travis CI

‼️ Azure DevOps

‼️ AWS CodePipeline

The list can feel endless, and it’s further exacerbated by suppliers and contractors bringing their own CI/CD to projects.

This is how expensive, distributed and decentralised DevOps sprawl starts: every environment is different, each needs its own support and DevOps team, and TOC is off the charts.

Instead, at least for cloud-based resources, be opinionated and standardise. Provision CI/CD as part of the account vend and hand it over to the consumer teams. There are many advantages to doing this:

↪️ Consistent DevOps experience across platforms resulting in smaller agile teams and lower TOC.

↪️ Drives IaC as a core principle: consumer teams need to be capable of building through code and automation (rather than clickops), resulting in resources and infrastructure that are auditable, repeatable, recoverable, supportable and consistent with principles & standards, with change management (via PR workflows) built in from the get-go.

↪️ Ability to introduce reusable, commoditised IaC modules to the consumer teams (more on this next), further reducing TOC, distributed DevOps sprawl, complexity and inconsistent DevOps experience across workloads.

↪️ Centralised InfoSec teams can integrate code scanning and other security tooling into standardised CI/CD workflows, gaining further insight and confidence across the complete code base.

↪️ Suppliers and contractors don’t need to quote for IaC CI/CD, further reducing costs.

↪️ Consumers are bootstrapped. When the account is provisioned, access to build is granted through the platform-provisioned CI/CD and associated repo, so consumers can start provisioning immediately.
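One way to picture "standardised, but still the consumer's pipeline" is a vended pipeline whose mandatory stages (including security scanning) can be extended but not removed or reordered. The sketch below is tool-neutral and entirely hypothetical: the stage names, the `<alias>-iac` repo naming convention and the rule that consumer stages slot in before `plan` are assumptions for illustration, not a feature of any particular CI/CD product.

```python
from dataclasses import dataclass

# Hypothetical stages the platform mandates, in order, in every vended pipeline.
MANDATORY_STAGES = ("checkout", "lint", "security-scan", "plan", "approve", "apply")


@dataclass(frozen=True)
class Pipeline:
    account_id: str
    repo: str
    stages: tuple[str, ...]


def vend_pipeline(account_id: str, workload_alias: str,
                  extra_stages: tuple[str, ...] = ()) -> Pipeline:
    """Build the standard pipeline; consumer stages are slotted in before 'plan'."""
    clash = set(extra_stages) & set(MANDATORY_STAGES)
    if clash:
        raise ValueError(f"consumer stages may not reuse mandatory names: {sorted(clash)}")
    cut = MANDATORY_STAGES.index("plan")
    stages = MANDATORY_STAGES[:cut] + tuple(extra_stages) + MANDATORY_STAGES[cut:]
    # Hypothetical naming convention: one IaC repo per workload alias.
    return Pipeline(account_id=account_id, repo=f"{workload_alias}-iac", stages=stages)


def is_compliant(pipeline: Pipeline) -> bool:
    """True only if every mandatory stage is present and in the mandated order."""
    if not all(stage in pipeline.stages for stage in MANDATORY_STAGES):
        return False
    positions = [pipeline.stages.index(stage) for stage in MANDATORY_STAGES]
    return positions == sorted(positions)


if __name__ == "__main__":
    pipeline = vend_pipeline("111122223333", "payments", ("unit-tests",))
    print(pipeline.repo)  # payments-iac
    print(is_compliant(pipeline))  # True
```

A compliance check like `is_compliant` is what lets InfoSec trust every pipeline in the estate without reviewing each one by hand.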

Consumer Facing IaC Modules

Regardless of what your consumer teams are building, there will be many commodity items that can be modularised and provided centrally for general consumption. Some good examples of AWS commodity resources worth modularising with infrastructure as code are:

➡️ S3

➡️ ECS/Fargate

➡️ Lambda

➡️ SQS / SNS

➡️ EventBridge

➡️ API GW

➡️ All ELBs

➡️ EC2 and ASGs

➡️ EBS

➡️ IAM Roles

➡️ RDS/Aurora

➡️ KMS

➡️ Route 53

➡️ VPC Endpoints

➡️ CloudWatch Alarms/Config

Stuff that’s highly reusable. I’m not saying you should do this for everything, but for core commodity items it’s highly recommended.
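The post doesn't prescribe an IaC tool, so here's a tool-neutral sketch of what a commodity module means in practice: a Python function that emits a CloudFormation `AWS::S3::Bucket` resource in which encryption and public access blocking are fixed by the platform, while consumers only choose a logical name, pass in their tags and toggle versioning. The function name, parameters and the example KMS key ARN are hypothetical; the same idea maps onto a Terraform module or a CDK construct.

```python
import json


def s3_bucket_module(logical_id: str, tags: dict[str, str], kms_key_arn: str,
                     versioned: bool = True) -> dict:
    """Return a CloudFormation resource fragment with platform-enforced defaults."""
    properties = {
        # Encryption is always on, with a customer-managed KMS key.
        "BucketEncryption": {
            "ServerSideEncryptionConfiguration": [
                {
                    "ServerSideEncryptionByDefault": {
                        "SSEAlgorithm": "aws:kms",
                        "KMSMasterKeyID": kms_key_arn,
                    }
                }
            ]
        },
        # Public access is always blocked; consumers can't override this.
        "PublicAccessBlockConfiguration": {
            "BlockPublicAcls": True,
            "BlockPublicPolicy": True,
            "IgnorePublicAcls": True,
            "RestrictPublicBuckets": True,
        },
        # The one consumer-tunable knob in this sketch.
        "VersioningConfiguration": {"Status": "Enabled" if versioned else "Suspended"},
        # Mandatory tags captured at account vending flow into every resource.
        "Tags": [{"Key": k, "Value": v} for k, v in sorted(tags.items())],
    }
    return {logical_id: {"Type": "AWS::S3::Bucket", "Properties": properties}}


if __name__ == "__main__":
    template = {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": s3_bucket_module(
            "ReportsBucket",
            {"CostCode": "CC-1234", "Environment": "non-prod"},
            kms_key_arn="arn:aws:kms:eu-west-2:111122223333:key/example",  # hypothetical
        ),
    }
    print(json.dumps(template, indent=2))
```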

Why?

The benefits here are almost identical to provisioning CI/CD and IaC repos to consumers.

✅ Consistent DevOps experience

✅ Drives IaC as a core principle

✅ Reduces TOC, distributed DevOps sprawl, complexity and inconsistent DevOps experience across workloads.

✅ Increases InfoSec compliance confidence in code use.

✅ Suppliers and contractors don’t need to quote for it, further reducing costs.

✅ Consumers are bootstrapped. Commodity IaC modules are available for immediate consumption

✅ Consumers can bring their own code for non-commodity items, but it still runs through the platform-provisioned CI/CD workflow and associated repos.

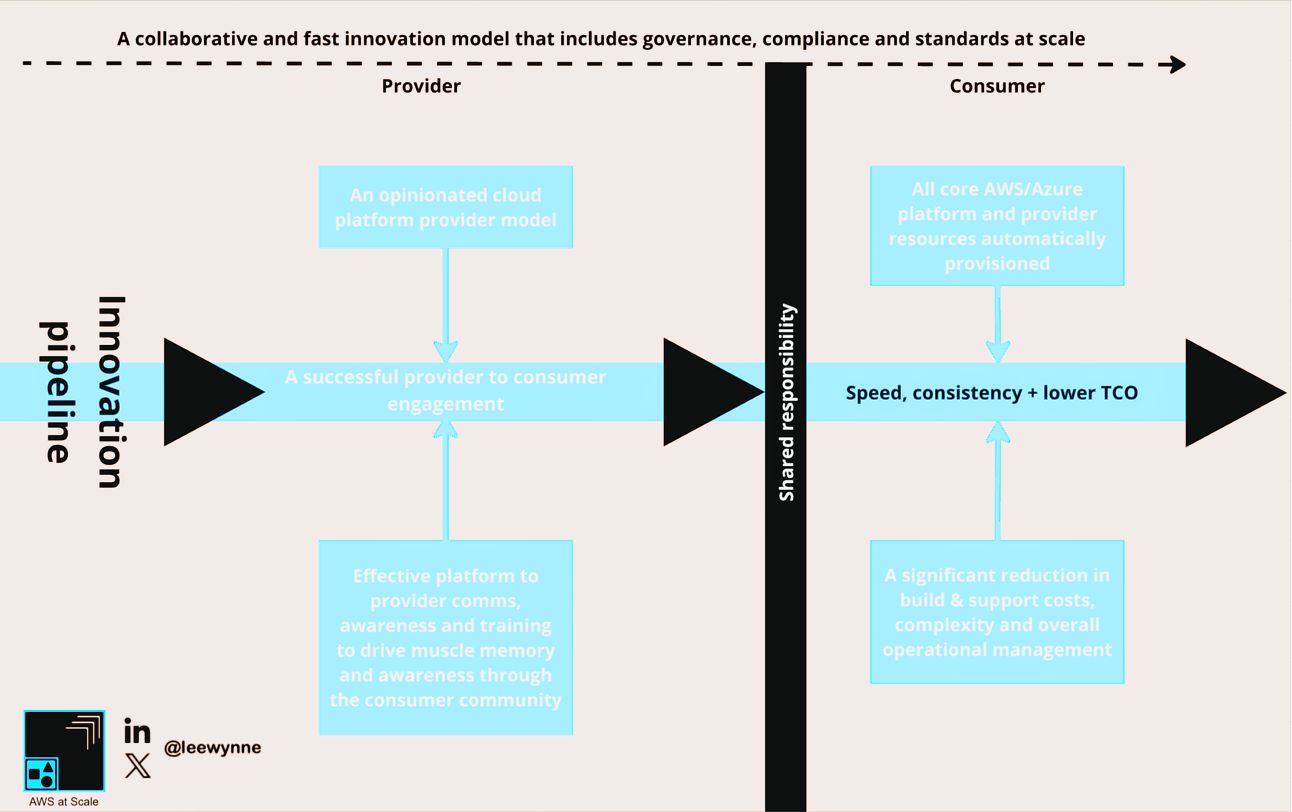

Platform Impact & Expected Outcomes

Within the provider-consumer model, you’ll engineer a suite of key features designed to bootstrap an AWS/Azure environment efficiently and at scale.

Provider to Consumer Innovation Pipeline

As outlined throughout this post, these features include:

🦾 Automated AWS account creation.

🦾 Amazon VPC reference architecture provisioning.

🦾 Consumer repo and CI/CD pipeline provisioning.

🦾 Commoditised, off-the-shelf infrastructure as code modules with built-in automated compliance and governance mechanisms.

By automating these foundational elements, the provider ensures that best practices are consistently applied across the environment, reducing the time and effort required to set up and manage these critical components.

As a direct result, consumers can focus entirely on building and deploying their applications & services without worrying about the complexities of infrastructure provisioning.

Additionally, this streamlined platform-to-consumer approach translates into significant cost savings on new supplier engagements: because the setup is automated, suppliers no longer need to design and build an appropriate landing zone and can focus all of their effort on accelerating workload development.

Help Out

Thank you again for subscribing to AWS at Scale. If you like my content, please visit these posts online, share them across your socials and support me by tagging me @leewynne.
